Here is a small experiment in which I tried to reproduce the results Jean-Philippe Raymond published in this post. I also looked at the example YAML specification provided by Vincent Dumoulin. Since I was not sure about the role of the composite layer in Vincent's example, I included it in my model specifications, unlike Jean-Philippe. Compared with Jean-Philippe's results, mine do not suggest that it makes a difference. On top of the composite layer I added a regular hidden layer.

I trained two models, one with a 250-unit regular hidden layer and the other with 500 units. The 250-unit network trained for longer than the 500-unit one. This seems to be linked to the termination criterion (which monitors the validation objective) and to the randomness introduced by stochastic gradient descent. The best MSE is approximately 0.44 for both models, which is close to Jean-Philippe Raymond's results.
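To illustrate why two similar models can stop after different numbers of epochs, here is a minimal sketch of a generic patience-based rule on the validation objective: training stops once the objective has failed to improve by a given proportion for a fixed number of consecutive epochs. This is an assumption for illustration, not the exact criterion used in these experiments. The function name and the `prop_decrease` and `patience` parameters are hypothetical, and the curves below are synthetic, not the models' real validation curves.

```python
import math
import random


def stopping_epoch(valid_objective, prop_decrease=0.01, patience=5):
    """Return the epoch index at which training would stop, or None.

    Hypothetical rule: an epoch counts as an improvement only if its
    validation objective is below (1 - prop_decrease) * best-so-far.
    After `patience` consecutive epochs without improvement, stop.
    """
    best = float("inf")
    epochs_left = patience
    for epoch, value in enumerate(valid_objective):
        if value < (1.0 - prop_decrease) * best:
            best = value
            epochs_left = patience  # reset the countdown on improvement
        else:
            epochs_left -= 1
        if epochs_left == 0:
            return epoch
    return None  # criterion never fired within the given epochs


def synthetic_curve(seed, epochs=100):
    """Synthetic decaying validation curve plus Gaussian noise (not real data)."""
    rng = random.Random(seed)
    return [0.5 + 0.5 * math.exp(-e / 10) + rng.gauss(0, 0.01) for e in range(epochs)]


if __name__ == "__main__":
    # Same underlying curve, different noise: the stopping epoch can differ.
    for seed in (0, 1):
        print(seed, stopping_epoch(synthetic_curve(seed), prop_decrease=0.001, patience=5))
```

Because the rule reacts to noisy per-epoch values, two runs that converge to roughly the same objective can still stop at quite different epochs, which is consistent with the observation above.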

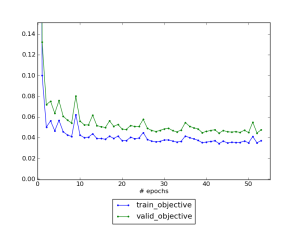

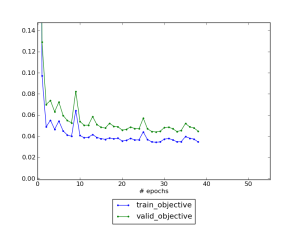

Here are two graphs showing the training progress of both models.

250 units

500 units

The code and results are available on GitHub.